The Task of the Scientist

The abstract for this paper can be found here: https://open.substack.com/pub/bergsonsghost/p/abstract-the-task-of-the-scientist

Translation is a form.1 To comprehend it as a form, one must go back to the original, for the laws governing the translation lie within the original, contained in the issue of its translatability. The question of whether a work is translatable has a dual meaning. Either: Will an adequate translator ever be found among the totality of its readers? Or, more pertinently: Does its nature lend itself to translation and, therefore, in view of the significance of this form, call for it?2

Walter Benjamin

“The Task of the Translator”

What language does nature speak? Does it lend itself to translation and call for it? If, as Benjamin argues, the laws governing a translation lie within the original, then what laws does nature give us, and how do they govern nature’s translation? What is the nature of nature?

Of course, Benjamin is not concerned with nature in his well-known essay “The Task of the Translator.” His focus is explicitly on the translation of human texts from one language to another. But central to that project is a deeper distinction, one that quietly structures his entire understanding of human expression: for Benjamin, what distinguishes human life is not its biological existence, but its capacity to signify, to represent something beyond itself. This distinction is subtle but worth reflecting on. Benjamin writes, “All purposeful manifestations of life, including their very purposiveness, in the final analysis have their end not in life, but in the expression of its nature, in the representation of its significance” (1996: 255). In other words, for Benjamin, the value of human life is not found in its biological functions or natural origin, but in how it comes to represent something beyond itself, how life is given meaning. This meaning, importantly, does not come from nature. Nature may overflow with information, what we call data or facts, but it offers no intrinsic guidance, no value, no moral direction. It does not speak in the way human language speaks. For Benjamin, meaning is found instead in “the more encompassing life of history,” and understanding what he means by history, as opposed to nature, is crucial to understanding the full scope of his argument. He writes:

The idea of life and afterlife in works of art should be regarded with an entirely unmetaphorical objectivity. Even in times of narrowly prejudiced thought there was an inkling that life was not limited to organic corporeality. But it cannot be a matter of extending its dominion under the feeble scepter of the soul, as Fechner tried to do, or, conversely, of basing its definition on the even less conclusive factors of animality, such as sensation, which characterize life only occasionally. The concept of life is given its due only if everything that has a history of its own, and is not merely the setting for history, is credited with life. In the final analysis, the range of life must be determined by the standpoint of history rather than that of nature, least of all by such tenuous factors as sensation and soul. The philosopher’s task consists in comprehending all of natural life through the more encompassing life of history. And indeed, isn't the afterlife of works of art far easier to recognize than that of living creatures? (1996: 254-255, emphasis added)

Here, Benjamin draws a sharp distinction between biological life and historical life. History, understood as the unfolding of human culture, language, and creative production, offers a perspective on life that exceeds the rhythms of nature. Societies, cultures, and works of art possess a revealing and paradoxical trait: they often outlive the people who created them. The “life” of a cultural form is fundamentally different from the life of an animal species. It does not follow the cycles of biological replication and death, but evolves through interpretation, transmission, and transformation. For Benjamin, it is this broader, “more encompassing life of history” that should inform our understanding of life itself, not the other way around.

What is Benjamin getting at here? How can life be rooted in something that has no biological body and is not bound to nature’s cycles? In one sense, his point is intuitive and obvious: the history and culture of humankind far exceed any single biological life. We are all born into a history that precedes us and, with any luck, will continue long after we are gone. This history shapes our language, our institutions, our very sense of what is possible. The meaning we assign to our lives is inseparable from the historical and cultural frameworks we inherit—frameworks that shape our values, goals, and self-understanding.3

Consider how differently life is interpreted across time. People born into the ancient world were guided by myth, divine law, and cosmological hierarchies that gave their lives a kind of sacred coherence. By contrast, modern life is shaped by ideals like individualism, scientific rationality, and technological progress. In each case, history provides the lens through which life is lived and understood, a lens that extends well beyond any biological lifespan.

Even science is not exempt from history. Galileo’s discovery of Jupiter’s moons, for example, had a meaning specific to his historical moment. It challenged the prevailing geocentric worldview by revealing that not everything in the heavens orbited Earth, thereby lending support to Copernicus’ heliocentric model. What mattered was not only what Galileo saw, but when and how it resonated with the symbolic order of his time. This scientific observation helped rupture one historical framework and inaugurate another, laying the groundwork for the modern worldview.4

And yet, from the standpoint of science, this dependence on historical context should be unsettling. Is the meaning of science found in its relationship to the history it emerges from, or in the nature it seeks to describe? What, ultimately, is the difference between history and nature?

One way to understand this difference is to examine how history and science each relate to language. History, as a discipline, is inherently intra-linguistic, a realm constructed and explored through the medium of language itself. Historians grapple predominantly with texts, narratives, and interpretations, bringing language to bear on language to reconstruct and understand the past. Nature, conversely, appears extra-linguistic. The physical world exists independently of human language, and while scientists use language to describe, analyze, and theorize about natural phenomena, nature itself does not "speak" in human tongues. Thus, the scientist brings language to the non-linguistic, attempting to understand the universe's workings through observation, experimentation, and the construction of explanatory frameworks. This crucial difference, one discipline working within the confines of language, the other striving to bridge the gap between language and the physical world, shapes the methodologies and epistemologies of both fields.

Initially this divide between history and science, between the intra-linguistic and the extra-linguistic, seems stable and self-evident. History is the domain of words, texts, and meanings; science, the domain of facts, measurements, and the mute regularities of the natural world. Each discipline appears to operate within its own logic: one interpretive, the other empirical. But Benjamin’s thinking on translation quietly and then forcefully unsettles this distinction. What begins as a focused inquiry into the problem of translating human texts soon opens onto something far more expansive. In the seemingly narrow question of how one language carries over into another, Benjamin uncovers a kind of linguistic activity that defies disciplinary boundaries altogether, one that hints at a deeper unity between language, history, and nature, and calls into question the very terms by which we separate them.

At the center of this destabilization is Benjamin’s radical rethinking of what translation reveals. For him, translation is not merely a technical exercise in converting meaning from one language to another. It exposes something far deeper: that all languages, in their very structure, are reaching beyond themselves, straining toward an expression that no single tongue can fully articulate. Benjamin calls this horizon reine Sprache, or pure language. It is not a literal language (neither English, nor German, nor Mandarin, nor Hindi) but a conceptual ideal: the idea that every language carries within it a fragmentary intention, a gesture toward a shared meaning that exists not in any one system, but in the resonance between them. In choosing the unfamiliar reine Sprache, Benjamin means to echo the familiar reine Mathematik (pure mathematics). Pure language is to everyday language as pure mathematics is to applied mathematics: an idealized, structural possibility rather than a lived, pragmatic tool. Pure language is not a grammar or a code, but the silent drive that gives rise to language itself, the structuring impulse behind every act of expression that seeks the complete and final word.

How can pure language be both the structuring impulse behind expression (a kind of origin) and the conceptual horizon toward which all language strains (a kind of destination)? Because origin and destination are the same tension seen from different angles. Language is born from a double bind: it arises from a primal need or impulse to communicate (to externalize inner states, intentions, emotions, and ideas), but it is always already haunted by the impossibility of complete re-presentation. The sign is never the actual feeling of hunger, or the actual thing named; it is a stand-in, a proxy. As Benjamin suggests, every act of language is haunted by a deeper “pure” meaning it can never fully retrieve: a horizon that all speech gestures toward but cannot attain.

This horizon exists simultaneously at two scales: the individual and the collective. On the individual level, expression is always incomplete because no one can fully translate the fullness of inner experience into language; something is always lost, distorted, or left behind. But at the collective level, language becomes a shared archive of attempts—a distributed project in which every utterance, every formulation, is a fragment oriented toward a common (if unreachable) whole, a more expansive, if still partial, map of reality. For Benjamin, this means that every act of expression—whether in science, literature, or ordinary speech—is part of a larger, collective effort to name a reality that exceeds any one perspective. No single language holds the key; each is only a fragment, a partial attempt to articulate what cannot be fully captured in isolation. Pure language, then, is not a mystical doctrine but a structural insight: it names the condition of linguistic incompleteness, and the shared direction in which all languages point. It is the unreachable whole toward which every utterance, every translation, gestures—never arriving, but always orienting.

All suprahistorical kinship between languages consists in this: in every one of them as a whole, one and the same thing is meant. Yet this one thing is achievable not by any single language but only by the totality of their intentions supplementing one another: the pure language. (1996: 256–257)

In this view, language is not merely a tool for transmitting information, but a living system engaged in an ongoing struggle with the limits of expression. It revises itself, translates itself, and tests new forms, not to reach perfect clarity, but to approximate a truth that always exceeds its grasp. Scientific language is no exception. Theories and equations are not mirrors of reality; they are models: simplified, structured representations shaped by historical context and conceptual frameworks. Just as multiple scientific models can describe facets of a phenomenon without ever exhausting it, different languages approach the same underlying meanings from distinct angles. They are like diverse instruments tuning to the same resonance: individually imperfect and partial, yet collectively illuminating.5 The map is not the territory, but each map is drawn by a yearning for a fuller expression of the real, a shared but unreachable whole that all our languages, models, and theories endlessly try to approach.

Benjamin uses a striking metaphor to describe this shared whole: each language is like a shard of a broken vessel, and pure language is the imagined whole, the original prelinguistic unity from which all languages have fractured. Translation, then, is not just about converting words from one language to another. It is an effort to let these fragments resonate with one another, to gesture toward the structure they once belonged to even if that structure can never be fully restored.

In this pure language—which no longer means or expresses anything but is, as expressionless and creative Word, that which is meant in all languages—all information, all sense, and all intention finally encounter a stratum in which they are destined to be extinguished. (1996: 261)

At first glance this sounds abstract, even mystical. But what Benjamin is describing cuts to the heart of how knowledge itself operates. We tend to equate information, meaning, and intention with clarity, precision, and control: with what can be articulated, measured, or tested. But Benjamin reminds us that the most profound truths often exceed the systems we use to express them. In pure language, information is not erased, but absorbed into a more foundational stratum of prelinguistic expression, one that exists prior to distinctions like subject and object, signal and meaning. This is not mysticism, but a recognition of the structural limits of articulation and sense-making. It names a tension that all meaning-making, including science, must navigate: the persistent drive toward coherence in the face of the impossibility of a total and final expression.

This tension can be seen at work in science, especially in its most ambitious moments. For what is science, if not an attempt to articulate a kind of pure language through the formal universality of mathematics and physical law? Science aims for universal laws, expressed in precise, often mathematical terms that transcend cultural and linguistic boundaries. The language of physics, for instance, aspires to describe the fundamental structure of reality in a way that anyone, anywhere, regardless of native tongue or cultural background, could in principle understand. Equations, symbols, standardized units—these are not merely tools of communication, but attempts to construct a language that speaks directly to nature itself, bypassing the messiness and ambiguity of ordinary speech. In this sense, science could be seen as enacting, even if unintentionally, a version of Benjamin's “kinship of languages”—a convergence toward a shared code, a universal form of expression.

But this analogy reveals a deeper tension. If nature is the “original text” that science translates, then what kind of text is it? In Benjamin’s account, translation is not a relationship between a text and an external reality; it is a relationship between languages. Translation presupposes a prior expression, not a mute substrate. So is science translating nature or is it constructing a language that lets nature appear as if it were already speaking? To answer this question we must distinguish between information and meaning.

The Weight of What is Not Said - Information vs Meaning

How can we distinguish information from meaning? The two are often conflated, but they are not the same. Information is physical; meaning is not. While information may shift form—from text to voice, or from ink to code—it must always be instantiated physically. Any attempt to communicate anything, no matter how poetic or profound, must take on a physical form to be communicated.

Take a Shakespearean sonnet: it can be printed on a page, spoken aloud, typed into a text message, encoded as binary data, or transmitted as radio waves. Though the form differs, they all carry the same information so long as each preserves the structure of the message: the words, their order, the punctuation. Whether the sonnet arrives as ink, sound, or code, what matters is that it arrives intact and legible.
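The claim that information survives a change of medium can be checked directly. The sketch below (Python, chosen only for illustration) carries the opening line of Sonnet 18 through three encodings and back; the variable names are hypothetical, and UTF-8 bytes stand in, by assumption, for whatever physical medium one prefers.

```python
line = "Shall I compare thee to a summer's day?"  # Sonnet 18, line 1

# Three changes of form: bytes (like a file on disk), bits (like a signal), hex (another notation).
as_bytes = line.encode("utf-8")
as_bits = "".join(f"{b:08b}" for b in as_bytes)   # each byte written as eight 0/1 characters
as_hex = as_bytes.hex()

# Decode each representation back to text.
from_bits = bytes(int(as_bits[i:i + 8], 2) for i in range(0, len(as_bits), 8)).decode("utf-8")
from_hex = bytes.fromhex(as_hex).decode("utf-8")

print(len(as_bits), "bits")
print(from_bits == line and from_hex == line)     # the structure survived every change of form
```

Only the ordering of symbols has to survive the trip; nothing in the code needs to know what the line means.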

Meaning, in contrast, is not located in the physical substrate. It emerges from the relations among information structures as perceived by a mind. Whereas information can be quantified and transmitted without understanding, meaning arises only when a mind interprets that information. Meaning emerges when a receiver (a reader, listener, viewer, etc.) engages with information and brings their history, emotions, knowledge, and expectations into play. The same sonnet might strike one reader as romantic, another as tragic, and another as ironic. A scientific paper may contain data, formulas, and defined terms—its information is precise—but the meaning of its conclusions can vary depending on who reads it, when, and why. Meaning is what happens in the space between information and interpretation. It’s contextual, unstable, layered, and always shaped by the encounter between the communication of information and its receiver. Information can be quantified; meaning must be interpreted. And while the same information may be present for every receiver, meaning is never fully fixed.6

With this distinction between information and meaning, we can see that nature provides information but not meaning. A falling leaf carries data: about gravity, wind, seasonal change, cellular decay. A fossil carries information about extinct organisms and geological time. A rainbow carries information about atmospheric optics, about how sunlight is refracted and reflected by water droplets. But these phenomena do not mean in the way a poem or a historical document means. The leaf does not intend to speak. The rainbow is not trying to say anything. Raw data from nature carry no narrative or semantic content until interpreted by a mind or model. The falling leaf obeys physical laws, but any meaning (‘summer is ending’, or ‘a message from the spirits’) is assigned by an observer.

Here is where the scientist departs from the translator. The translator begins with a human voice and tries to preserve its intention across languages. The scientist begins with a subjectless universe, with events and patterns in the physical world, and tries to build a system that renders them intelligible. Science, then, is not about translating a message from nature, as though nature were already speaking in a human tongue. Rather, it is about constructing a language that emerges from systematic engagement with regularities in the natural world. It is not language about nature; it is language through which nature becomes legible.

If science is not translating messages that nature already articulates, but instead constructing a language that renders nature legible, then what governs that construction? Science may not extract meaning from nature in the way a translator would from a text, but it does extract information—it finds regularities, patterns, and relations that can be encoded into models. Nature does not speak in sentences, but it can be measured, predicted, and formalized. In this sense, the language of science operates not by conveying meaning, but by navigating informational patterns or structure. And it is here, at the level of structure rather than content, that science begins to echo Benjamin’s vision of pure language: not as a shared semantic code, but as an abstract system of relations that all expressions gesture toward, yet none can fully contain.

The distinction between physics, chemistry, and biology helps make this clearer. Physics deals in universal laws, abstract and mathematically precise, which often seem closest to a “pure” language of nature. Chemistry, though grounded in physical law, introduces emergent behaviors: molecules, reactions, and bonds that cannot be easily reduced to physics alone. Biology adds yet another layer—historical and environmental contingency, feedback systems, and symbolic complexity. Its objects—genes, cells, organisms—are shaped by evolutionary pressures and developmental context. Across all three disciplines, science does not decode nature’s inherent meaning, but instead builds models that allow us to treat nature as if it were speaking in an intelligible system. In this way, scientific modeling mirrors Benjamin’s theory of translation, not as the recovery of original content, but as the construction of a structure that gestures toward a coherence always just out of reach.

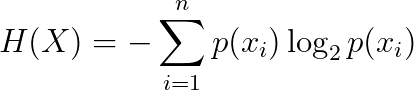

To understand the difference between scientific modeling of information and human meaning more precisely, we can turn to a major conceptual shift in 20th-century science: the rise of information theory, developed by Claude Shannon. Shannon’s framework redefined communication not in terms of meaning or interpretation, but in terms of structure and probability.7 Information, in this model, is not about what a signal says or means, but about how much uncertainty it removes. It is measured in bits, not by content, and reflects how many possible outcomes the signal rules out. In Shannon’s system, communication becomes a question not of expression, but of constraint: how structured, how surprising, how efficient. Shannon called the measure of this uncertainty “entropy” and devised a mathematical formula that gives a precise measure of how much information, on average, a source produces per message. This is known colloquially as “Shannon entropy.”8
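Shannon’s measure can be computed for a short string. The function below is a minimal sketch under a simplifying assumption: it treats each character as drawn independently and uses the character frequencies in the string as the probabilities (a plug-in estimate), so it ignores context such as spelling and grammar. The name `shannon_entropy` is illustrative, not a library function.

```python
from collections import Counter
from math import log2

def shannon_entropy(message: str) -> float:
    """Average bits per symbol, sum of p * log2(1/p), estimated from symbol frequencies.

    Assumes symbols are independent; real text has structure this ignores."""
    counts = Counter(message)
    n = len(message)
    return sum((c / n) * log2(n / c) for c in counts.values())

print(shannon_entropy("aaaaaaaa"))   # one repeated symbol: 0.0, no surprise at all
print(shannon_entropy("abababab"))   # two equally frequent symbols: 1.0
print(shannon_entropy("abcdefgh"))   # eight equally frequent symbols: 3.0
```

A string of one repeated symbol removes no uncertainty; with eight equally frequent symbols, three yes/no questions suffice to single one out, hence 3 bits. The content of the symbols plays no role, only their probabilities.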

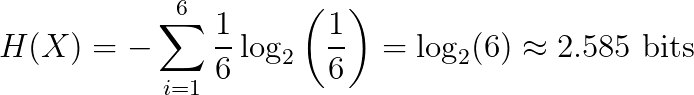

One way to explain Shannon entropy is with a die. Imagine rolling a fair six-sided die, where each face (1 through 6) is equally likely. Before you roll, the outcome is completely uncertain: any face could appear with probability 1/6. Shannon entropy measures the “surprise,” or uncertainty, about which face will show up. When all outcomes are equally likely, the entropy is at its maximum for that die. For a fair six-sided die, the Shannon entropy of a single roll is about 2.585 bits. How do we arrive at that number?

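We can show this using the formula for Shannon’s entropy (in bits), where pᵢ is the probability of the i-th of n possible outcomes:

$$H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i$$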

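Applying it to the die roll, where all six outcomes are equally likely (pᵢ = 1/6), the entropy simplifies to:

$$H(X) = -\sum_{i=1}^{6} \frac{1}{6}\log_2\frac{1}{6} = -\log_2\frac{1}{6} = \log_2 6 \approx 2.585 \text{ bits}$$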
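The same calculation can be checked numerically by applying H = −Σ p log₂ p to a list of probabilities. In the sketch below, `entropy_bits` is a hypothetical helper, and the loaded die is an invented example included only to show that unequal probabilities lower the entropy.

```python
from math import log2

def entropy_bits(probs):
    """Shannon entropy in bits: sum of p * log2(1/p), skipping zero-probability outcomes."""
    return sum(p * log2(1 / p) for p in probs if p > 0)

fair_die = [1 / 6] * 6
loaded_die = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]   # hypothetical die biased toward 1

print(round(entropy_bits(fair_die), 3))    # 2.585, the same as log2(6)
print(round(log2(6), 3))                   # 2.585
print(round(entropy_bits(loaded_die), 3))  # 2.161: the outcome is easier to guess
```

The loaded die is less surprising on average, so fewer bits are needed to describe its rolls; no distribution over six faces can exceed the fair die’s log₂ 6.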

While the mathematics of Shannon entropy may initially seem intimidating, the core idea is straightforward and intuitive: it represents the smallest average number of well-chosen yes/no questions one must ask to determine the outcome of an uncertain event, such as “What number came up when I rolled the die?” A “bit” is one unit of such questioning. A bit always takes one of two values, 1 or 0, so it can stand for the answer to a single yes/no question: yes (1) or no (0).

As an example:

1: Was the number greater than 3?

Ans: No

2: Was the number 1 or 2?

Ans: No

3: You rolled a 3

With this strategy, question 1 always cuts the six faces down to three, and question 2 either settles the answer outright (as with the 3 here) or leaves a 50/50 choice that a third question resolves. Choosing questions that split the remaining possibilities as evenly as possible keeps the count low. Averaged over all six faces, this strategy needs 2⅔ (about 2.667) questions per roll: two faces take two questions and four take three. Shannon entropy, 2.585 bits, is the floor beneath that figure: no yes/no strategy can average fewer questions per roll, and the floor is approached only by asking about many rolls at once. This is what Shannon means by “entropy” in practical terms: how many yes/no questions (bits of information) are needed, at minimum and on average, to resolve the uncertainty of a random event and discover the exact outcome. Think of Shannon entropy as a thermometer for randomness. It tells you how much uncertainty you're dealing with, which in turn helps you reason about fairness, surprise, compressibility, and predictability. Knowing the Shannon entropy of a die, for example, helps you check whether it is fair, estimate how far a record of its rolls can be compressed, gauge how predictable or surprising its outcomes are, and compare how hard it is for people or machines to guess its results.9
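The questioning strategy in the example (first ask “greater than 3?”, then split the remaining three faces into two and one) can be written out and averaged over all six faces. The sketch below does that and, for comparison, computes an optimal (Huffman) question tree over several rolls asked about together; both function names are illustrative. It shows a single-roll average of 8/3 and how asking about blocks of rolls pulls the per-roll figure down toward log₂ 6 ≈ 2.585.

```python
from fractions import Fraction
from math import log2

def questions_needed(face: int) -> int:
    """Yes/no questions the example strategy uses to identify one roll of a die."""
    asked = 1                                    # Q1: was the number greater than 3?
    group = [4, 5, 6] if face > 3 else [1, 2, 3]
    asked += 1                                   # Q2: was it one of the first two in that group?
    if face in group[:2]:
        asked += 1                               # Q3: was it the first of those two?
    return asked

single_roll = Fraction(sum(questions_needed(f) for f in range(1, 7)), 6)
print(single_roll, float(single_roll))           # 8/3, about 2.667 questions per roll
print(log2(6))                                   # about 2.585 bits, the theoretical floor

def avg_questions_per_roll(k: int) -> float:
    """Average questions per roll when k rolls are identified together with an optimal
    (Huffman) question tree over the 6**k equally likely outcomes."""
    n = 6 ** k
    depth = (n - 1).bit_length()                 # ceil(log2(n)) for n >= 2
    shallow = 2 ** depth - n                     # outcomes identified one question early
    total = shallow * (depth - 1) + (n - shallow) * depth
    return total / n / k

for k in (1, 2, 3, 10):
    print(k, round(avg_questions_per_roll(k), 4))  # drifts toward log2(6) as k grows
```

Grouping rolls is what closes the gap: for k rolls asked about together, the best average per roll always lies between log₂ 6 and log₂ 6 + 1/k, which is the sense in which 2.585 is an average rather than a count of questions for any single roll.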

What makes this shift in perspective so profound is that it places entropy, a concept from thermodynamics, at the heart of both physical and symbolic systems. Shannon borrowed the term entropy to describe the informational unpredictability of messages, but in doing so, he created a conceptual bridge between two domains that had long been held apart. In thermodynamics, entropy measures the dispersal of energy, the drift from order to disorder. In information theory, it measures the uncertainty or “surprise” within a stream of data. But in both cases, entropy names a shared logic of transformation. It describes how systems change, degrade, and preserve structure in the face of loss.

At first glance, Shannon entropy and thermodynamic entropy, as defined in statistical mechanics by Josiah Willard Gibbs, appear to belong to entirely different domains. Gibbs’s entropy measures the disorder or randomness within a physical system, typically at the molecular level. Shannon’s entropy, by contrast, quantifies the uncertainty in a message transmitted over a communication channel. One deals with the probabilistic distribution of particles in physical space; the other with the probabilistic distribution of symbols or signals in informational space. Yet despite these differences, both forms of entropy express a common underlying logic: they measure the constraints on what can be predicted or known within a given system.10

So while Gibbs and Shannon began from different domains, physical matter versus information, the logic they discovered converges. In statistical mechanics, entropy reflects how many microstates (distinct arrangements of particles) correspond to a single macrostate, such as a given temperature or pressure. Likewise, in information theory, entropy reflects how many microstates (distinct possible messages or signal combinations) could underlie a given informational outcome, or macrostate, such as a spoken or written sentence. In both cases, entropy quantifies the hidden degrees of freedom beneath an observable macrostate: how much cannot be determined from the macro-level alone. Whether you're examining molecules in a room or symbols in a string, the key question remains the same: how many ways could this outcome have happened? Entropy quantifies what remains uncertain, what is hidden beneath the observed macrostate.
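The microstate question can be made concrete with a toy system. The sketch assumes ten coins, treats “number of heads” as the macrostate and each specific heads/tails sequence as a microstate, and counts the microstates behind each macrostate; when those are equally likely, the entropy is the base-2 logarithm of that count. The coins and the function name `hidden_bits` are illustrative assumptions, not a physical model.

```python
from math import comb, log2

N_COINS = 10  # toy system: ten coins (an illustrative assumption)

def hidden_bits(n: int, heads: int) -> float:
    """Bits of uncertainty left once only the macrostate (the number of heads) is known,
    assuming every compatible heads/tails sequence (microstate) is equally likely."""
    microstates = comb(n, heads)   # how many sequences produce this many heads
    return log2(microstates)

for heads in (0, 1, 5):
    print(heads, comb(N_COINS, heads), round(hidden_bits(N_COINS, heads), 3))
```

All tails can happen in only one way, so nothing is hidden; five heads can happen in 252 ways, leaving almost 8 bits undetermined by the macrostate.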

One way to distinguish Gibbs and Shannon entropy is by their domains of application: Gibbs is concerned with physical systems, including the universe treated as a thermodynamic whole, while Shannon addresses any system capable of communication. But these worlds begin to overlap if we entertain the possibility that the universe itself might be legible as a message—not in the metaphorical sense of containing a secret code, but in the structural sense of producing patterns, regularities, and compressible information. From this perspective, Gibbs and Shannon are not speaking at cross-purposes, but addressing different layers of the same problem: how to quantify constraint, structure, and possibility in a system that could always have been otherwise.
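“Compressible” has a practical meaning that can be tried out. The sketch below uses Python’s standard `zlib` compressor as a rough stand-in for Shannon’s measure (an assumption: real compressors only approximate the entropy of a source). A sequence generated by a simple rule shrinks dramatically; pseudorandom bytes of the same length do not shrink.

```python
import random
import zlib

n = 10_000
patterned = bytes(i % 7 for i in range(n))            # a regularity: repeats every 7 bytes
rng = random.Random(0)                                # seeded so the run is reproducible
noisy = bytes(rng.randrange(256) for _ in range(n))   # no rule a compressor can exploit

for name, data in (("patterned", patterned), ("noisy", noisy)):
    print(name, len(data), "->", len(zlib.compress(data, 9)), "bytes")
```

The rule that generated the patterned bytes is far shorter than the bytes themselves, and the compressor finds a description close to that rule; the pseudorandom bytes offer no such shortcut.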

A key distinction, however, lies in how this uncertainty is grounded. Shannon entropy is dimensionless. Its units, bits (base 2) or nats (base e), are measures of informational quantity, not physical substance. This reflects its abstract nature: it captures uncertainty in purely statistical terms, independent of material embodiment. Gibbs entropy, by contrast, is inseparable from its physical grounding. It incorporates Boltzmann’s constant k_B, which links microscopic behavior to macroscopic observables like temperature. The result is an entropy expressed in joules per kelvin, anchored in the thermodynamic realities of energy and temperature. Where Shannon abstracts information from its medium, Gibbs insists that information is the medium: carried by particles, bound to energy, constrained by physical law.11
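The relation between the two kinds of units can be shown in a few lines. The sketch below applies Gibbs’s formula, S = −k_B Σ p ln p, and Shannon’s, H = −Σ p log₂ p, to the same probability distribution: the fair die, treated purely formally as if its six faces were six equally likely microstates. The helper names are illustrative; the value of k_B is the exact one fixed by the 2019 SI redefinition.

```python
from math import isclose, log, log2

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def shannon_bits(probs):
    """Shannon entropy in bits (dimensionless)."""
    return sum(p * log2(1 / p) for p in probs if p > 0)

def gibbs_entropy(probs):
    """Gibbs entropy -k_B * sum(p ln p), in joules per kelvin."""
    return K_B * sum(p * log(1 / p) for p in probs if p > 0)

die = [1 / 6] * 6
H_bits = shannon_bits(die)     # about 2.585 bits
H_nats = H_bits * log(2)       # about 1.792 nats: same quantity, natural-log units
S = gibbs_entropy(die)         # about 2.47e-23 J/K

print(H_bits, H_nats, S)
print(isclose(S, K_B * log(2) * H_bits, rel_tol=1e-12))  # True: one bit <-> k_B ln 2 J/K
```

The shape of the formula is the same; what changes is the constant in front and the base of the logarithm, so each bit of Shannon entropy corresponds to k_B ln 2, roughly 9.57 × 10⁻²⁴ J/K.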

In this sense, Shannon entropy can be seen as a generalization of the idea Gibbs helped formalize. It extends the logic of entropy beyond the domain of physical matter to any domain where uncertainty can be measured and constrained. What began as a tool to describe the statistical behavior of atoms became, through Shannon, a framework to understand the structure of messages, the dynamics of languages, and, perhaps, the entropic foundations of meaning itself.

It is difficult to overstate the significance of this move. Like the Copernican revolution, Shannon’s generalization of entropy to symbol-making in general reorients our relation to the world and to our own minds. It reveals that nature and culture, matter and meaning, are not separate orders but parallel responses to a shared condition. Entropy becomes the hidden thread that runs through both physical processes and symbolic expression. It collapses the old boundary between what is and what is represented, between physical objects and symbols of those objects, between the world and our attempts to model it. In this view, meaning is not opposed to matter; it is one of the forms that matter takes when pressed against the limit of disorder. Entropy is no longer simply a measure of loss; it is the precondition of intelligibility, the background against which all pattern, all coherence, all understanding must be constructed and continually reconstructed.

To better grasp what is at stake in this conceptual convergence, it helps to return to the origin of the term entropy. “Entropy” was coined in 1865 by the German physicist Rudolf Clausius, from the Greek τροπή (tropē), meaning “transformation.” Clausius deliberately chose a word that echoed “energy” to highlight the deep kinship between the two quantities: entropy, he wrote, is the “transformation content” of a body, not a measure of substance but of change. Remarkably, he also emphasized the need for a term that would remain stable across future languages, a gesture that resonates uncannily with Walter Benjamin’s idea of pure language. Clausius’s entropy is intended not merely as a scientific term, but as a universal concept: born in history, yet structurally invariant across time, systems, and tongues.12

Yet embedded in the very idea of transformation is a paradox: no conversion from one state to another is ever fully efficient. In thermodynamics, entropy accounts for the energy lost to heat and made unavailable for work during any process. In information theory, entropy quantifies the uncertainty or noise that disrupts the fidelity of transmission. Transformation always entails the loss of precision, structure, or context. What is preserved is never identical to what is sent; some information is inevitably dissipated or distorted.
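Information theory makes the cost of noise precise with the binary symmetric channel, a standard textbook model swapped in here for illustration rather than drawn from the essay’s sources: each transmitted bit is independently flipped with some probability. The sketch computes the channel’s capacity, 1 − H(p) bits per transmitted bit, where H is the binary entropy function; the function names are illustrative.

```python
from math import log2

def binary_entropy(p: float) -> float:
    """H(p) in bits for a yes/no event that happens with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)

def bsc_capacity(flip_prob: float) -> float:
    """Capacity, in bits per transmitted bit, of a binary symmetric channel
    that flips each bit independently with probability flip_prob."""
    return 1.0 - binary_entropy(flip_prob)

for f in (0.0, 0.01, 0.1, 0.5):
    print(f, round(bsc_capacity(f), 3))  # noise lowers the ceiling; at 0.5 it reaches zero
```

Even a 1% flip rate lowers the ceiling below one bit per transmitted bit, and at 50% nothing gets through, because the output is then independent of the input. Error-correcting codes can approach this ceiling but never exceed it.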

If we follow this thread back to translation, the link becomes clearer. If translation is the transformation of one form into another, entropy ensures that transformation is never perfect. Every transmission incurs loss. Signals degrade, languages evolve, meanings drift. Entropy is what makes translation possible and also what makes it impossible to complete. It is both the condition and the limit of intelligibility.

Representation - From Reference to World Model

Having distinguished information from meaning, and having examined how Shannon entropy quantifies information as a measure of uncertainty or surprise without reference to semantic content, we now turn to the question of representation. While information, in the Shannon sense, is defined independently of meaning, it is never encountered in a raw or abstract state. For an organism, a system, or a mind to register information at all, it must be encoded, embodied, or expressed in some medium. That is, it must be represented. Representation is the necessary interface between information and any system capable of receiving or acting on it. To understand how information functions within physical, biological, or cognitive systems, we must ask: how is it made present? In what forms does it appear? What are the structures that give information its shape and thus make it intelligible?

The word information comes from the Latin informatio, meaning “a shaping” or “the act of forming,” derived from informare: in- (“into”) and formare (“to form or shape”). Originally, it referred to the act of giving form to something, whether to matter (in philosophical contexts) or to the mind (as in instruction or education). Over time, especially in English, its meaning shifted to signify communicated knowledge or data. Yet at its root, information still carries the sense of giving shape to something, whether a thought, a message, or a system. And that act must always occur through a form, a medium, a signal, a sign: in short, a representation. This suggests that information and representation are not separate substances or entities, but analytically distinct dimensions of the same event. You cannot have one without the other, but you can ask different questions about each. To ask about information is to ask: What difference does this make? What can be inferred, predicted, or done based on it? Does it allow for predictions better than chance? To ask about representation is to ask: What form does this take? How is it structured, encoded, or materialized?

Representation, then, is the means by which any system, biological or artificial, encodes its relation to the world. It is how information becomes actionable, how perception gives rise to prediction, and how experience is transformed into structure. In this sense, to ask what a representation is, or how it functions, is to ask how meaning enters the world—not from above, but from within. It is the interface between noise and order, between raw data and the internal models that give it shape.

Computational models of cognition and artificial intelligence ground representation not in symbolic language but in perception. They begin with the question of how a prelinguistic embodied system makes sense of an environment in order to act.13 These models are grounded in evolutionary logic: biological agents survive and replicate by forming internal models that reduce uncertainty about the world. They achieve this by compressing physical reality into sensory data that guides actions and supports predictions better than chance.14 In this view, symbolic language is not the precondition of thought or representation but a later evolutionary development that emerges on top of these prelinguistic cognitive architectures. It is not the starting point of thought but the end result of a long process of cognitive development grounded in sensorimotor experience, externalizing and refining the brain’s more fundamental capacities for simulation, compression, and abstraction.

At bottom, computational models assume that representation is fundamentally pragmatic, emerging from the functional demands of interaction with the world, rather than being defined by formal symbolic systems. This pragmatic conception views representations as internal structures or dynamic patterns that support prediction, perception, and action within an agent’s internal model of the world. After all, to act in the world is to intervene on a reality that is not fully given. This task demands more than mechanical stimulus-response behavior; it requires the ability to anticipate, to simulate, to hypothesize, and to learn from error. And this predictive behavior emerges long before symbolic language, as even the simplest organisms show.

Take the way an ordinary gut bacterium, E. coli, swims toward food. It drifts in a chaotic molecular soup and can either use its flagella to swim straight or tumble briefly, randomizing its direction before trying again. To choose which movement to make, it uses its built-in “senses” (surface receptors), which amount to a kind of perception: they tally how many attractant molecules strike the cell each moment and retain a brief internal record of that tally. A minimal form of representation begins right here, not as a picture of the world but as a tiny internal record or memory of what matters to E. coli: a sliding average of recent concentrations. Each new sample is compared to this internal record. When the concentration trend is positive, the bacterium prolongs its straight run; when the trend turns negative, it increases tumble frequency, seeking a new heading. In effect, E. coli distills a torrent of chemical data from the surrounding molecular soup into a single binary signal, “better” or “worse,” and lets that signal steer its flagella. This is perception pared to the essentials, and it reveals representation as radical compression: just enough information to bias one of two actions. Long before nerves, words, or equations, chemotaxis shows how life first solved the problem of knowing the world by stripping it to the minimum actionable pattern.15
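The strategy described above can be sketched as a toy simulation. Everything quantitative here is a hypothetical assumption, not a measured property of E. coli:

- the cell moves in two dimensions at unit speed;
- the attractant field is an invented function that peaks at the origin;
- the “sliding average” is an exponential moving average;
- the tumble probabilities (0.05 when things are getting better, 0.5 when they are getting worse) are chosen only to make the effect visible.

A control cell that tumbles at a fixed rate, ignoring the signal, is included for comparison.

```python
import math
import random

def concentration(x: float, y: float) -> float:
    """Hypothetical attractant field: highest at the origin, falling off with distance."""
    return 1.0 / (1.0 + math.hypot(x, y))

def run_and_tumble(steps: int, rng: random.Random, sense: bool = True,
                   start: tuple[float, float] = (50.0, 0.0)) -> float:
    """Simulate one cell and return its final distance from the attractant source."""
    x, y = start
    heading = rng.uniform(0.0, 2.0 * math.pi)
    memory = concentration(x, y)  # sliding (exponential moving) average of recent samples
    for _ in range(steps):
        # Run: swim one unit along the current heading.
        x += math.cos(heading)
        y += math.sin(heading)
        sample = concentration(x, y)
        better = sample > memory  # the single binary signal: "better" or "worse"
        memory = 0.7 * memory + 0.3 * sample  # fold the new sample into the record
        if sense:
            tumble_prob = 0.05 if better else 0.5
        else:
            tumble_prob = 0.1  # control cell: tumbling ignores the signal entirely
        if rng.random() < tumble_prob:
            # Tumble: pick a new heading at random.
            heading = rng.uniform(0.0, 2.0 * math.pi)
    return math.hypot(x, y)

def mean_final_distance(sense: bool, trials: int = 50, steps: int = 1000, seed: int = 0) -> float:
    """Average final distance over many independent cells, with a fixed random seed."""
    rng = random.Random(seed)
    return sum(run_and_tumble(steps, rng, sense) for _ in range(trials)) / trials

print(f"with sensing:    {mean_final_distance(sense=True):.1f}")
print(f"without sensing: {mean_final_distance(sense=False):.1f}")
```

With sensing switched on, cells should end up, on average, much closer to the source, while the control cells simply diffuse. The only difference between the two is whether one binary comparison is allowed to bias the tumble rate.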

Cognitive science increasingly understands this predictive capacity of organisms in terms of internal models that encode an agent's expectations about the structure and dynamics of its environment. A world model provides a compressed, partial map—a working hypothesis about the causes of sensory input. To generate novel predictions, counterfactuals, or action plans, agents also rely on generative models, internal systems capable of simulating not only what is observed but what could be observed under different conditions. While world models interpret the present, generative models envision the future, guiding purposeful action. These models are central to contemporary theories of cognition and agency, offering a computational account of how agents maintain coherence over time and act purposefully.16

From this perspective, representation is primarily a matter of adaptive modeling, not linguistic signification. It is the capacity to compress sensory input and use this compressed signal to guide action under uncertainty. Symbolic language, far from being the origin of representation, is an extension of prelinguistic capacities for pattern recognition, prediction, and abstraction. Unlike classical theories that take symbolic language to be the ground of meaning, computational accounts see it as an evolutionarily scaffolded tool: a highly structured compression built upon more basic compressions that enable inference and interaction. This framework treats representations not as words or symbols but as dynamic patterns that support prediction and action. Whether linguistic, sensory, or proprioceptive, these patterns allow agents (biological or artificial) to construct internal models of their environments, simulate possibilities, and guide behavior in real time. Representation becomes active rather than passive, predictive rather than descriptive, situated rather than abstract. In short, computational models see representation as inseparable from the embodied and situated processes that generate it.17

This reframing establishes continuity between the representational capacities of simple organisms, nonverbal children, and linguistic adults, integrating perception, action, and cognition within a single framework that does not require prior access to symbolic systems. Symbolic language, then, does not generate representation but reorganizes it by introducing new constraints, affordances, and social dynamics, while still depending on the underlying capacity of agents to build and update internal models through engagement with the world.

This shift in understanding is particularly evident in studies of perception, which computational models inspired by brain function recast as an active, predictive process rather than a passive intake of sensory data. Here perception is conceived as dynamically updated patterns that enable anticipation, inference, and action. Representations capture the statistical regularities of an environment too complex or latent to be perceived directly. Crucially, they are not full encodings of the world, but compressed histories of interaction—informational shortcuts shaped by the demands of relevance, memory, and survival. Representation becomes a way of reducing uncertainty just enough to act, and of managing complexity through selective loss or compression.18

Compression involves reducing the amount of information needed to represent a signal by removing redundancy, minimizing unpredictability, or foregrounding only what is functionally relevant. In information-theoretic terms, a compressed signal conveys the same usable content as the original, but in a more efficient form. However, compression is never neutral. It encodes the priorities and constraints of the system doing the compressing. What gets discarded, and what gets preserved, depends on the needs and vulnerabilities of the agent. A system under pressure to act cannot afford to process everything; it must filter, simplify, reduce. In doing so, it transforms high-entropy, high-dimensional input into low-entropy, structured form. In this way, compression is not a distortion of reality but the very condition for making sense of it. Raw signals are sifted for what matters: each sensory pattern—sound, shape, smell, touch, taste—is tagged as noise, threat, or opportunity and used in the organism’s working model of the world. Meaning arises not from a full replica of reality but from entropic pruning: from the act of cutting away what does not matter, in order to preserve what does. Representations in this sense are not interpreted but used: their value lies in how effectively they compress sensory input, infer latent causes, and guide action in dynamic environments.19
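A toy illustration of this kind of goal-relative pruning follows, under openly artificial assumptions. A stream of random readings stands in for sensory input. An invented rule keeps only which of three bands each reading falls into (“threat,” “opportunity,” or “noise”). The general-purpose compressor zlib, from Python's standard library, then measures how far each version can be shrunk. The pruned stream is far smaller, but the tagging step is lossy: the original readings cannot be recovered from the tags.

```python
import random
import zlib

rng = random.Random(42)

# Hypothetical "sensory" input: 10,000 readings in [0, 1), written out as text.
readings = [rng.random() for _ in range(10_000)]
raw = ",".join(f"{r:.6f}" for r in readings).encode()

def tag(reading: float) -> str:
    """Invented relevance rule: keep only the band a reading falls into."""
    if reading > 0.9:
        return "T"  # threat
    if reading < 0.1:
        return "O"  # opportunity
    return "n"      # noise: everything in between is treated as irrelevant

pruned = "".join(tag(r) for r in readings).encode()

raw_packed = zlib.compress(raw, 9)
pruned_packed = zlib.compress(pruned, 9)
assert zlib.decompress(raw_packed) == raw  # zlib itself is lossless

print(f"raw readings: {len(raw):>6} bytes, {len(raw_packed):>6} compressed")
print(f"pruned tags:  {len(pruned):>6} bytes, {len(pruned_packed):>6} compressed")
```

Only the tagging step discards anything; zlib merely removes redundancy. What counts as worth keeping lives entirely in the hypothetical tag rule, which mirrors the point that compression encodes the priorities of the system doing it.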

This reconceptualization of representation as entropic pruning finds a powerful ally in evolutionary theory. From an evolutionary standpoint, perception is not designed to reveal the world “as it is,” but to extract patterns that are behaviorally relevant—those that enhance an organism’s ability to survive and reproduce. In this view, the internal world model an agent constructs is not a mirror of objective reality, but a compressed, efficient encoding of features that matter for action. As Henri Bergson and others have argued, evolution does not select for truth, but for utility.20 The perceptual systems of organisms are tuned not to the full richness of the world, but to the subset of distinctions that best serve a fitness function—a set of environmental pressures and constraints that define what counts as adaptive behavior.

This insight finds a striking parallel in the Ugly Duckling Theorem, first formulated by Satosi Watanabe. At first glance, the theorem seems absurd, almost metaphysically threatening, but it is mathematically rigorous and devastating in its implications. It proves that, without a predefined bias or relevance function, no classification is privileged over any other. If every possible predicate counts equally, any two distinct objects share exactly as many properties as any other two.21 What this means, counterintuitively, is that no pattern is intrinsically more meaningful or “natural” than any other unless we first specify what matters. Even something as basic as identity (the ability to say that “this is the same as that”) requires a prior model of relevance.
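The counting behind the theorem can be checked directly for a small case. In the usual presentation, a predicate is identified with the set of objects it is true of. So n objects admit 2^n predicates, and any two distinct objects fall together under exactly 2^(n-2) of them. The Python sketch below verifies this by brute force, encoding each predicate as an n-bit mask. It illustrates only the combinatorial core, not Watanabe's full formulation.

```python
from itertools import combinations

def shared_predicate_counts(n_objects: int) -> dict[tuple[int, int], int]:
    """For each pair of distinct objects, count the predicates true of both.

    A predicate is identified with the subset of objects it is true of, encoded
    as an n-bit mask, so there are 2 ** n_objects predicates in total.
    """
    counts = {}
    for a, b in combinations(range(n_objects), 2):
        counts[(a, b)] = sum(
            1 for mask in range(2 ** n_objects)
            if (mask >> a) & 1 and (mask >> b) & 1
        )
    return counts

counts = shared_predicate_counts(4)
print(counts)  # every pair shares 2 ** (4 - 2) = 4 predicates
```

Whichever pair is chosen, the count is the same. Similarity becomes unequal only once some predicates are weighted more heavily than others, and that weighting is exactly the prior model of relevance the theorem says we cannot do without.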

This result unravels the intuition that categories and similarities are simply “out there” in the world, waiting to be discovered. Instead, it shows that all perception is a form of structured neglect: a principled ignoring of the vast majority of possible features in favor of those that serve a goal. From an evolutionary perspective, this goal is survival and replication; from an information-theoretic one, it is entropy reduction. Either way, the perceiving agent does not passively reflect the world—it filters it through constraints imposed by its fitness function, context, and historical interaction. Representations, then, are not maps of reality but compressive strategies: ways of carving relevance out of noise. Meaning is not recovered from the world; it is generated by the system that selects which distinctions matter for it to act on. In this sense, representation is the entropic pruning of possibility into usable form, a method for transforming boundless potential into actionable patterns.

This is precisely what organisms do when they coarse-grain the quantum foam of reality into stable, actionable perceptions: they collapse a bewildering microstate teeming with subatomic uncertainty into a macrostate that serves their survival. Just as temperature emerges from the average kinetic energy of countless particles, perception emerges from the selective compression of an overwhelming informational substrate into a few salient variables. What we call “the world” is not a neutral recording of physical reality, but a low-entropy projection shaped by evolutionary, cognitive, and computational constraints. A pragmatic hallucination that maximizes relevance while minimizing surprise.22
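The temperature analogy can be made concrete with a small sketch. Assume a monatomic ideal gas; argon is used here only as an example. Kinetic theory relates the average kinetic energy per atom to temperature by ⟨E_k⟩ = (3/2) k_B T. The code below draws 100,000 hypothetical atomic velocities at 300 K, which amounts to 300,000 microscopic numbers, and coarse-grains them back into a single macroscopic variable.

```python
import math
import random

K_B = 1.380649e-23       # Boltzmann constant in J/K (exact in the 2019 SI)
ARGON_MASS = 6.6335e-26  # approximate mass of one argon atom in kg

def sample_velocities(n: int, temperature: float,
                      rng: random.Random) -> list[tuple[float, float, float]]:
    """Maxwell-Boltzmann velocities: each component is Gaussian with variance k_B T / m."""
    sigma = math.sqrt(K_B * temperature / ARGON_MASS)
    return [(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
            for _ in range(n)]

def temperature_from(velocities: list[tuple[float, float, float]]) -> float:
    """Coarse-grain: collapse every microscopic velocity into T = 2<E_k> / (3 k_B)."""
    total_ke = sum(0.5 * ARGON_MASS * (vx * vx + vy * vy + vz * vz)
                   for vx, vy, vz in velocities)
    mean_ke = total_ke / len(velocities)
    return 2.0 * mean_ke / (3.0 * K_B)

rng = random.Random(1)
atoms = sample_velocities(100_000, 300.0, rng)
print(f"{len(atoms) * 3} velocity components -> T = {temperature_from(atoms):.1f} K")
```

Countless different microstates yield the same temperature. That is exactly what makes the macrostate useful, and exactly what it leaves out.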

Strikingly, this dynamic conception of representation aligns with the principles of information theory. Claude Shannon’s notion of entropy measures the uncertainty or unpredictability within a system. In this context, effective representations function as compressive patterns or models that reduce entropy by filtering relevant information from noise, thereby decreasing uncertainty. The more uncertainty a representation removes, the clearer the predictions it supports and the more effective its bearer’s interactions with the environment. Representation from this perspective is not about fidelity to reality but fidelity to what enables prediction and control. Representation, in perception and in language alike, is about patterns that reduce uncertainty.
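Shannon's measure is H(X) = -Σ p(x) log₂ p(x), in bits. The sketch below evaluates it for two invented belief states about where food might be found among four places. One is a uniform, uninformed guess; the other is a sharper model that concentrates probability on one location. The distributions are hypothetical. The point is only that a representation which narrows expectations lowers entropy.

```python
import math

def shannon_entropy(probs: list[float]) -> float:
    """H(X) = -sum of p * log2(p), in bits; outcomes with p = 0 contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical beliefs about which of four places holds food.
uninformed = [0.25, 0.25, 0.25, 0.25]  # no model: every place equally likely
informed = [0.85, 0.05, 0.05, 0.05]    # a model that has learned where food tends to be

print(shannon_entropy(uninformed))  # 2.0
print(shannon_entropy(informed))    # roughly 0.85
```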

This dynamic understanding of representation becomes especially vivid when we consider affect.23 Affect, at its origin, is not a word or a thought but a signal—a raw, embodied response to environmental or internal conditions. It manifests first in the body: a quickening pulse, a tightening chest, a sharp pain. These signals are rich, pre-linguistic data—multidimensional, situated, and deeply entangled with survival. They are not representations in the semiotic sense, but rather affective events: adaptive reactions that encode significance without the need for symbols.

Yet when we attempt to speak about affect—to say “I’m angry,” or “I’m afraid,” or “that really hurt”—we undertake a kind of translation. We compress that embodied signal into a representational form—a symbolic utterance, a discrete sign—that can be shared across minds. But something is lost in the process. The language does not transmit the feeling; it re-presents it, which means it simplifies, abstracts, and frames it according to a different logic. The dense, affective signal of the body is encoded into a lower-dimensional symbolic pattern.24

This gap between feeling and articulation is not accidental; it is structural. It reflects the same trade-off present in all representational systems: the need to reduce entropy and to compress the inchoate flux of experience into manageable, transmissible form. In information-theoretic terms, affect is high-entropy: full of uncertainty, nuance, and context. The spoken word is low-entropy: a clear signal, but at the cost of complexity. Representation, in this context, is not a mirror of affect but a model of it, a way of organizing internal states so that they become legible to others and to oneself. In doing so, it makes possible reflection, coordination, and action, but it also introduces distortion.

And it is here, in this liminal space between sensation and articulation, that the concept of translation returns, not as metaphor, but as something we all live and experience. Because from this perspective, the act of giving voice to affect is a paradigmatic case of translation—not across languages, but across modalities. It is a movement from the embodied to the symbolic, from the private to the public, from the reactive to the representational. And just as no translation ever fully captures the original text, no description of an emotion can ever fully contain its source. This is not a failure of language but a condition of representation itself: to make meaning portable, it must be partial.

The implications of this are twofold. First, they reveal the limits of expression: every articulation necessarily excludes as much as it includes. Second, they highlight the generative potential of these limits. Because representation cannot fully exhaust its referent, it opens space for metaphor, approximation, and new forms of understanding. In this light, emotion is not merely something we feel and then express. It is something that becomes structured through the very act of representation. The signal becomes a sign, and in doing so, enters the recursive, predictive machinery of the agent’s symbolic world-model.

One objection to this line of thought is that the limits of what can be represented are not simply functional and contingent but fundamental, built into the very nature of symbolic systems. In this line of argument, Gödel’s incompleteness theorems stand as a formal demonstration of the limits of symbolically structured systems and are cited as proof that language is inherently incapable of capturing reality in its entirety. The argument, broadly construed, is that any formal system, whether language, logic, or mathematics, is bound to leave something unsaid, some truths unprovable within its own framework. This idea is frequently enlisted to support a kind of epistemological humility: a reminder that symbolic systems are always partial, always haunted by what they cannot express.

But for this objection to work, one must presuppose that cognition operates as a symbolic logic, manipulating words according to fixed syntactic rules. As Joscha Bach argues, this interpretation misunderstands both Gödel and the nature of cognition.25 Gödel’s theorems apply specifically to formal symbolic systems. These are closed, rule-bound systems, expressive enough to encode arithmetic, that manipulate discrete symbols according to syntactic laws. They do not apply to physical systems undergoing state transitions, such as brains, organisms, or computational architectures. Physical systems evolve according to physical laws, not symbol manipulation rules, and are probabilistic and approximate, not deterministic and proof-based. From this perspective, a cognitive system does not seek completeness in the Gödelian sense; it seeks functional adequacy: compressing experience, anticipating outcomes, maintaining coherence. Gödel’s limit simply doesn’t apply here.

But one might still argue that if cognition escapes Gödel as a functional and pragmatic system, subjectivity does not. We live inside formal systems (linguistic, social, legal) that impose constraints modeled on completeness and consistency. The disjunction between our embodied, adaptive minds and the rigid architectures we are forced to inhabit creates a friction that is epistemological, ethical, and existential. A computational response would be that while language, law, and social codes are indeed formal systems, they are embedded within, and parasitic on, deeper computational substrates.26 What you experience as “subjectivity” is the brain’s self-model: an emergent, generative process operating below the level of symbolic language. In this view, formal systems like symbolic language are not detached signs floating above the material world. They are approximations, or compression schemes, that turn high-entropy experience into a low-entropy encoding of that experience. A word is not a pure abstraction; it is the residue of perception pruned to what matters. Each representation is a lossy summary of a deeper, richer, more chaotic stream. Language, in this view, is not a mirror of thought but a survival strategy: a way of modeling the world just enough to act within it.

Where classical theories draw a sharp line between representation and substrate,27 a so-called epistemic cut, an entropic model sees a gradient. Representations are not ontologically distinct from physical processes. They emerge not from a metaphysical rupture but from the ongoing regulatory work of predictive systems trying to stay alive and replicate. The “cut,” if it exists at all, is not a boundary inscribed in nature but a product of compression, a line drawn by a system attempting to reduce uncertainty just enough to maintain coherence. Meaning does not arise in spite of the loss of direct experience to representation, but because of it. Representation is not opposed to embodiment; it is its consequence.

Advancing our understanding of representation as a functional component of world models is imperative in the context of computational and cognitive sciences. This computational perspective underscores the active role of representations in shaping perception (both exteroception and interoception), guiding action, and enabling prediction. By embracing this dynamic framework, we can better comprehend and develop systems—both biological and artificial—that navigate and interpret the complexities of their environments with greater efficacy.

Modeling Meaning Statistically: The Vector Space Revolution

To understand how powerful this shift in perspective is, it helps to look briefly at the history of machine translation. For decades, machine translation was built on the dream of a perfect transfer: a system of rules, grammars, and dictionaries that could map one language onto another with precision. These early models mirrored a classical understanding of language as a symbolic system: words corresponded to meanings, meanings to other words, and translation was the engineering of equivalence. But such systems stumbled when confronted with ambiguity, idiom, context, and drift, the very phenomena that entropy makes unavoidable. They assumed a kind of linguistic determinism that the reality of human language consistently undermined.

Here is where Benjamin’s perspective was quite prescient. For Benjamin, the goal of translation was not to preserve content, but to reveal the relational structure that connects languages across their differences. Meaning, in this view, is not fixed in words but moves among them and is subject to transformation, misalignment, and reconfiguration.

In the early 2010s, a profound shift occurred in machine translation which, remarkably, precisely captured Benjamin’s relational structure. The advent of word2vec in 2013 marked a turning point in computational language modeling and, indirectly, in translation.28 Unlike earlier rule-based or symbolic systems, word2vec introduced a method for capturing semantic relationships between words based not on definitions, but on statistical proximity in large-scale language data. Words were represented as vectors in high-dimensional space, and their meanings inferred from the company they kept. The model did not “understand” language in the human sense; it simply learned to map relations, to encode the structured difference that emerges from use. As the linguist J. R. Firth put it in 1957, adapting an old proverb about people: “You shall know a word by the company it keeps.”

Crucially, this method revealed that linguistic meaning could be mathematically modeled through structured patterns of transformation. Words sharing similar contexts ended up closer together in vector space, and, astonishingly, analogies like (king - man) + woman ≈ queen emerged. This was more than a computational trick: it suggested that meaning itself could be encoded as a geometry of transformation.
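The analogy arithmetic can be sketched with hand-made vectors. The three-dimensional “embeddings” below are invented for illustration; their axes loosely read as royalty, maleness, and femaleness. Real word2vec vectors are learned from text and typically have hundreds of dimensions with no labeled axes. The answer is the vocabulary word, other than the three query words, whose vector has the highest cosine similarity to king - man + woman.

```python
import math

# Hand-made 3-d vectors, invented for illustration; axes loosely read as
# (royalty, maleness, femaleness). Real word2vec vectors are learned, not assigned.
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.1, 0.1],
}

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: compares direction while ignoring vector length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def analogy(a: str, b: str, c: str) -> str:
    """Return the word nearest to vec(a) - vec(b) + vec(c), excluding the query words."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy("king", "man", "woman"))  # queen
```

Nothing in the code defines “queen” as the female counterpart of “king.” The answer falls out of the relative positions of the vectors, a miniature version of meaning as relational geometry.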

This breakthrough in modeling meaning as structured transformation also laid the groundwork for the development of large language models (LLMs), which took the core insight of word2vec and scaled it dramatically. LLMs like GPT are trained not only to embed words as vectors but to model sequences of language across vast corpora, predicting the next word in a sentence by internalizing the statistical structure of entire discourses. In doing so, they inherit and extend the logic that word2vec pioneered: that meaning arises not from fixed definitions but from dynamic relations—patterns of usage, substitution, and analogy across a linguistic field. The astonishing fluency of LLMs is not the result of a deep semantic grasp, but of their success in mimicking the way language differentially structures itself, echoing Benjamin's notion that meaning emerges not through direct correspondence but through networks of relational difference.

Indeed, the success of this approach to translation, first with word embeddings and now with transformer-based architectures, demonstrates the power of relational modeling.29 Neural machine translation systems now routinely outperform traditional methods, not because they "know" languages, but because they can navigate and reconstruct meaning across them via deeply learned relational mappings. In this sense, they enact a kind of Benjaminian translation: not a simple transfer of content from one language to another, but a reconfiguration of meaning through a shared latent structure. Translation becomes less about fidelity to an original and more about participating in a network of correspondences that lies beneath and between languages. Word2vec and LLMs reveal that language does not operate through rigid substitution, but through a field of structural resonance, where meaning is emergent, relative, and always in motion.30

This raises a provocative question: could machine computation, in its most advanced linguistic forms, be gesturing toward something like Benjamin’s pure language? Not in the sense of uncovering a universal language or divine truth, but as a kind of structural substrate, a latent space, where the intentions of different languages begin to align through their patterned divergences. In this view, models like word2vec or ChatGPT do not bring us closer to meaning as human consciousness experiences it. They do, however, bring us closer to a language of transformation: a logic of correspondence, drift, and resonance that echoes Benjamin’s shattered vessel metaphor. Machine computation, then, does not realize pure language, but it may approximate its form: not through interpretation, but through the geometry of vector space.

If Benjamin’s pure language is the unreachable totality toward which all languages aspire, machine learning systems are constructing probabilistic maps of that aspiration. Not as metaphysical truth, but as entropic structure. They reveal that meaning emerges not from isolated utterances, but from the relational topology of language as it moves, transforms, and recombines. In this way, machine computation does not rival human translation; it illuminates the structure within which translation, and perhaps even meaning itself, becomes intelligible.

Returning to Nature: Language as Cosmic Emergence

This returns us to the boundary between human language and the so-called “language of nature.” The Greeks imagined nature as physis—a dynamic system with its own inner logic. Later, the metaphor of the “book of nature” cast it as something to be read and interpreted. But what if this is not merely a metaphor? What if nature is linguistic, not because it has grammar or words, but because it obeys the logic of structured transformation?31 In this case, the act of speaking, writing, calculating, or theorizing is not outside nature and the cosmos—it is one of its emergent forms.

From this perspective, symbolic language is not an isolated human invention but a continuation of the same structural logic that governs the cosmos. Other systems (biological, physical, even geological) also transmit and transform patterned information across time. DNA encodes instructions for life; birdsong conveys territorial boundaries; tree rings record climatic history. These are not “languages” in the narrow human sense, but they are systems of structured difference, embedded in matter, shaped by context, and governed by many of the same principles that underlie human language. Human language does not break this logic; it radicalizes it. Recursion, abstraction, and reference give our signals unprecedented combinatorial range, yet they still hitch a ride on the same thermodynamic gradient that coaxes every pattern to emerge, stabilize, and, in time, disperse.

Human symbolic language, in this view, is not an ontological leap, but an emergent refinement of forms already present in the fabric of the universe. Our most intimate expressions—our thoughts, emotions, and perceptions—do not float free from the material world; they are shaped by constraints and patterns we inherit, not invent. The grammars we speak with are emergent from deeper systems: the neural architectures sculpted by evolution, the sound waves constrained by physical media, the cognitive categories shaped by centuries of cultural sediment. Even the act of communication is embedded in the physical: speech is modulated breath, vibration in air, a ripple through molecules. These processes obey the same thermodynamic laws that govern everything from the decay of stars to the alignment of magnetic fields. This invites a radical reconsideration of “meaning” not as a mysterious surplus added by human minds, but as an emergent thermodynamic artifact: structured order arising temporarily within a universe tilted toward disorder. This isn’t to diminish our creativity or agency, but to situate them within a broader entropic field in which patterns emerge, persist, and dissolve according to constraints that are not reducible to human intention. Even at our most expressive, we are speaking through systems—linguistic, cultural, physical—that precede and exceed us. Language is not a transparent medium for conveying thought; it is one of the ways the universe structures difference.

This reframing lets us revisit Walter Benjamin’s notion of “pure language” from a thermodynamic angle. The second law describes how isolated systems like the universe tend toward maximal entropy, toward a heat-death horizon: an asymptotic macrostate with the greatest multiplicity of microstates. From constrained order to maximal diffusion. Benjamin’s latent unity, then, is not a metaphysical ideal but a name for this entropic drift that underwrites all transformation. The universe is far from mute: it speaks in radiation, decay, and the continual reconfiguration of matter. Entropy is more than a tally of disorder; it is a generative condition, the very field in which difference and communication arise. Human language, rather than defying natural law, is one of its most intricate consequences. The second law thus serves as the deep grammar of the cosmos, Benjamin’s “pure language” expressed in thermodynamic terms.

Of course, entropy as a grammar and generative condition must be understood properly here. The Second Law of Thermodynamics is often misunderstood as if it describes a physical “force” pushing systems toward disorder. But entropy isn’t a physical force that causes change, the way gravity pulls or electromagnetism attracts. As Gibbs formalized it, entropy is a statistical measure, defined over the probabilities of a system’s possible microstates. When we say entropy “increases,” we’re describing a statistical tendency: systems evolve toward configurations that are overwhelmingly more probable because there are vastly more of them. There are countless ways for particles to be scattered and relatively few for them to be neatly ordered.32
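This statistical reading can be illustrated with the simplest possible count. Assume, purely for illustration, 100 distinguishable particles, each of which may sit in the left or right half of a box. The number of microstates with k particles on the left is the binomial coefficient C(100, k). When all microstates are equally probable, Gibbs's entropy reduces to Boltzmann's S = k_B ln W, computed below in units of k_B.

```python
import math

N = 100  # hypothetical number of distinguishable particles

def multiplicity(n_left: int, n: int = N) -> int:
    """Microstates with exactly n_left of the n particles in the left half of the box."""
    return math.comb(n, n_left)

total = 2 ** N                     # every particle independently left or right
all_left = multiplicity(N)         # perfectly "ordered": exactly one way
even_split = multiplicity(N // 2)  # the single most probable macrostate
near_even = sum(multiplicity(k) for k in range(40, 61))  # 40 to 60 particles on the left

print(f"all on the left: {all_left} microstate, S/k_B = {math.log(all_left):.1f}")
print(f"exactly 50 on the left: {even_split / total:.4f} of all microstates, "
      f"S/k_B = {math.log(even_split):.1f}")
print(f"40 to 60 on the left: {near_even / total:.4f} of all microstates")
```

Only one microstate puts every particle on the left, while more than 95 percent of all 2^100 microstates have between 40 and 60 there. No force pushes the gas toward the even split; there are simply overwhelmingly more ways to be there.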

So while entropy does not “drive” change in the causal sense, it sets the stage on which all change unfolds. And because structure, meaning, and complexity are all born within, and eventually dissolved by, this probabilistic landscape, entropy can be seen not just as a measure of decay but as the very condition under which differentiation, transformation, and communication become possible. In this light, calling entropy a generative condition or grammar is not to reify it as a mechanism, but to recognize that all differentiation and structure, including language, emerge temporarily within its statistical logic.
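To make the counting argument concrete, here is a minimal Python sketch of a toy model that the essay itself does not use: N particles, each of which can sit in either the left or the right half of a box. It counts the microstates compatible with each left/right split and checks that, for equally likely microstates, Gibbs’ formula reduces to the logarithm of their number. The function names and the choice N = 100 are illustrative assumptions.

```python
from math import comb, log

def multiplicity(n_particles: int, n_left: int) -> int:
    """Count microstates with exactly n_left of n_particles in the left half."""
    return comb(n_particles, n_left)

def gibbs_entropy(probs: list[float], k: float = 1.0) -> float:
    """Gibbs entropy S = -k * sum(p * ln p); k = 1 measures S in units of Boltzmann's constant."""
    return -k * sum(p * log(p) for p in probs if p > 0)

N = 100                      # hypothetical number of particles
total = 2 ** N               # each particle is either left or right

ordered = multiplicity(N, N)        # every particle crowded into the left half
mixed = multiplicity(N, N // 2)     # an even 50/50 split
near_even = sum(multiplicity(N, n_left) for n_left in range(40, 61))

print(f"microstates with all particles on the left: {ordered}")
print(f"microstates with a 50/50 split: {mixed:.3e}")
print(f"share of all microstates with a 40-60 split: {near_even / total:.3f}")

# With W equally likely microstates, Gibbs' formula reduces to ln W.
W = 1024
uniform = [1 / W] * W
print(f"Gibbs entropy {gibbs_entropy(uniform):.4f} vs ln W = {log(W):.4f}")
```

The ordered arrangement has exactly one microstate, while an even split has roughly 10^29 of them; that asymmetry in counting, not any force, is what “increasing entropy” describes.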

Yet for difference to make a difference and become meaningful, for structure to be registered as language rather than noise, something else is required: a point of view, a system capable of interpreting the flux. Entropy may govern the cosmos, but it does not speak to anyone unless there is someone, or something, there to listen. With the emergence of life, the entropic field acquires witnesses. The logic of the universe is no longer merely enacted but also perceived, modeled, and transformed. In this sense, the translator, or what science calls the observer, is not an afterthought to the system but one of its emergent properties: an agent whose role is to render difference legible, to turn the unfolding of physical law into the experience of meaning for someone or something.

The Role of the Observer: Translator or Encoder?

Both Gibbs’ and Shannon’s models reveal that information cannot exist without context. Entropy is not a property of the world “out there”—it only becomes measurable when a system is in place to define what counts as signal, and what counts as noise. In this way, entropy, like the wave function in quantum mechanics, requires a perspective. The world does not interpret itself. Meaning requires an observer or agent. A boundary-drawer. A translator.
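One way to see this dependence on perspective in Shannon’s terms is the minimal Python sketch below. It is an illustration added here, not an argument made by Gibbs or Shannon: the same sequence yields different entropies depending on which distinctions the observing system draws. The sample string and the vowel/consonant partition are arbitrary assumptions.

```python
from collections import Counter
from math import log2

def empirical_entropy(symbols) -> float:
    """Shannon entropy (bits per symbol) of the observed symbol frequencies."""
    counts = Counter(symbols)
    n = sum(counts.values())
    return sum((c / n) * log2(n / c) for c in counts.values())

message = "thetaskofthetranslator"   # hypothetical sample sequence

# Observer 1 distinguishes every letter.
fine_grained = list(message)

# Observer 2 only registers whether a letter is a vowel or a consonant.
coarse_grained = ["vowel" if ch in "aeiou" else "consonant" for ch in message]

# Observer 3 draws no distinctions at all: every symbol counts as the same.
undifferentiated = ["x" for _ in message]

for label, seq in [("letters", fine_grained),
                   ("vowel/consonant", coarse_grained),
                   ("no distinctions", undifferentiated)]:
    print(f"{label:>16}: {empirical_entropy(seq):.3f} bits/symbol")
```

The string never changes; only the boundaries drawn by the observer do, and with them the measured entropy, which falls to zero when no distinctions are drawn at all.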

But here, too, we must be careful: an agent does not necessarily have to be “human” in any essentialist sense. Meaning emerges not from human uniqueness, but from the relation between form and interpretation. From the interaction between entropy and structure. The translator, the scientist, the historian, the organism, the algorithm—all are agents within the same informational economy, governed by entropy, producing distinctions, drawing inferences, sustaining structure. The “human” is not the privileged interpreter of the world, but one instance of a more general principle: the agent of entropic meaning-making. To cling to the human as a discrete category set apart from nature, exempt from entropy, is to misunderstand both what language is and what history is made of.

This brings us back to Benjamin. His notion of pure language was never about a universal tongue actually spoken by an individual, but about the underlying unity that all languages—and all translations—gesture toward but never reach. Just as meaning emerges in the interplay between information and interpretation, pure language arises only through the incompletion of all individual expressions. Benjamin’s translator, like Shannon’s decoder, is not merely a conduit but a constitutive site: meaning exists because of an agent’s intervention, their perspective, their imperfection.

To speak, to write, to remember, to model: these are not acts of capturing truth, but of shaping entropy into form. Meaning is not a residue left after communication; it is the active work of drawing distinctions, of translating difference into structure. And it is this labor of interpreting, translating, and structuring difference that allows systems, from cells to cultures, to resist disorder and persist across time.

But as we have shown, meaning depends on loss—on exclusion, reduction, simplification. To be meaningful, a signal must stand apart from what it is not. Yet this differentiation is never neutral. It is conditioned by the needs of the agent doing the interpreting. Consider a cell navigating its chaotic molecular environment: it cannot attend to everything. In order to survive, it must reduce uncertainty in ways that serve its homeostatic aims. A molecule like glucose becomes meaningful only when it is recognized as relevant, as a potential energy source, against a background of countless other particles that are ignored. This filtering is not incidental; it is structurally necessary. Meaning emerges not from the objective features of the world, but from the alignment between selective perception and embodied purpose. Without such constraint, without the drawing of boundaries that exclude alternatives, there is no distinction, no direction, no usable pattern. Meaning, in this light, is not just shaped by loss; it is made by it.

Benjamin’s pure language and Shannon’s information entropy converge around a single insight: that meaning arises from loss, and that loss becomes meaningful only through a perspective that registers it. Communication, in its deepest sense, is not about fixing meaning once and for all; it is about enduring transformation through the presence of an agent who can perceive, remember, and translate.33

In this view, language is not merely human. It is the universe expressing itself in structured difference, and the agent, in whatever form it takes, is not outside this process but constitutive of it. To speak, to write, to remember, to model, is to instantiate entropy into form. It is to participate in the transformation-content of the world.

And so we approach a conclusion: entropy is not the enemy of meaning but its medium. Science and history are not opposed, but parallel responses to the same condition: the need to preserve, transform, and transmit structure across time. They are also invitations to rethink the boundaries we draw between mind and matter, between signal and noise, between human and non-human. To follow entropy to its logical end is not to abandon meaning, but to see that meaning was never ours alone. It arises wherever transformation becomes legible.

We began by distinguishing between the life of history—a life animated by meaning, narrative, and transmission—and biological life, which science has traditionally grounded in material processes and mechanistic explanation. We have seen how thermodynamics and information theory blur this boundary. They reveal that both biological and historical life are structured by entropy, translation, and the fragile preservation of form through time. In both, what persists is not substance, but signal, not essence, but pattern.

This calls into question the very meaning of “life.” No longer reducible to matter or energy, life appears as an effort to resist entropy by encoding, exchanging, and transforming information. In this, life becomes translational. Here Benjamin unexpectedly comes to the fore. His notion of pure language casts a metaphysical light on the struggle: every act of translation, like every scientific model or historical narrative, is a fragmentary gesture toward an unreachable whole, a “pure” understanding spanning the improbable coherence seeded in the Big Bang and the uniform hush that looms at thermal equilibrium. Pure understanding is not a destination any single language can reach; it is the thermodynamic gradient between those poles that lends each translation its direction and urgency.

From this perspective, science itself emerges as a kind of translation—an effort to render the world intelligible, to carry signals across time and perspective, even while recognizing that something is always lost. In this sense, the task of science increasingly mirrors what Benjamin saw as the task of the translator: not to reproduce simple patterns, but to reveal the echo of a deeper thermodynamic structure, a latent truth that no single formulation can exhaust.

Thus, a new scientific paradigm must recognize its own translational condition. It must grapple not just with matter and measurement, but with meaning, entropy, and the limits of representation. Science, like history, is no longer the pursuit of final truths, but the ongoing attempt to preserve and transmit significance in a world that constantly threatens to erase it.

The task of the scientist, then, is not to discover a final truth, but to remain in dialogue with a world that is always transforming. If entropy is the condition for meaning, then the work of science, like that of the translator or the historian, is never complete. Every theory is a temporary structure, a scaffold built against collapse. Every equation, every model, every measurement is a response to uncertainty, a translation of the inarticulate drift of the cosmos into something momentarily stable. But stability is never permanent. Entropy ensures that what is structured will eventually unstructure, what is intelligible will become noise again. The universe does not yield itself once and for all. It requires continual listening, continual rearticulation.

And yet the scientist may rightly insist: “Science makes real progress. It enhances our ability to predict, to intervene, to reshape the conditions of life.” This is true, and much of that progress has come from models built on control, objectivity, and separation. But just as often, science has advanced by expanding its view. The shift from genetic determinism to epigenetics revealed how environment and experience shape biology. Neuroscience moved beyond stimulus-response to view the brain as a prediction engine, modeling its world. Physics gave up the absolutes of Newtonian mechanics and embraced the observer-dependence of relativity and quantum theory. In each case, the boundaries of control gave way to more complex understandings grounded in emergence, relation, and contextuality.

In the same spirit, what we are calling for is not a rejection of science but a deepening of it. Where the scientific method posits a sharp boundary between observer and observed, the entropic turn folds them into the same universal drift: observation is simply a local pruning of uncertainty within the entropic flow that carries both knower and known in a universe of ceaseless becoming. What is needed, then, is a natural science that recognizes that its power arises not from standing outside nature or from pure objectivity, but from participating in nature’s unfolding: from structured transformation, from the entropic relation between pattern and perspective. Such a science would widen its horizon beyond the paradigm of prediction and control to include the dynamics of emergence, relation, and translation. It might discover that true mastery lies not in domination but in attunement: a yielding that sees in nature not a mechanism to be controlled, but a system of differences to be interpreted, structured, and restructured again.34

This is not a limitation—it is the logic of the task itself. There is no final code that captures reality, no pure message behind the noise. There is only the work: of building systems that hold, however briefly, and of remaining open to their failure. The scientist does not uncover a pre-given order; they participate in rendering order visible in a universe where transformation is the rule. If meaning emerges from the interplay between entropy and the agent who registers it, then science is the ongoing practice of making the world readable without ever exhausting it. Not a quest for certainty, but an attunement to difference, to degradation, to the fragile persistence of form. In the end, the task of the scientist is not to master entropy, but to make meaning within it and, in doing so, to glimpse a mastery that yields no less than it commands.

Postscript – Shannon Entropy: Real-World Applications

Let's look at two simplified examples of how Shannon entropy is applied in the real world:

Example 1: Engineering – Efficient Data Compression

Imagine you're designing a system to send text messages. Each message contains letters and spaces. If you assign the same number of bits to every character (for example, 8 bits for every letter and space, as is common in basic computer encodings), you waste bandwidth by sending unnecessary bits in every message. The reason is that some letters, like 'e' and 't', appear much more frequently than 'z' or 'q'. If you can exploit those frequencies, giving common characters shorter codes and rare ones longer codes, you can send fewer bits overall.

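Shannon entropy, $H = -\sum_x p(x)\log_2 p(x)$, gives the average information needed to represent each character, taking their frequencies into account. A character with probability $p$ carries $-\log_2 p$ bits of information, so common characters carry little and rare ones carry a lot. For example, if 'e' shows up 12% of the time, it carries about 3 bits ($-\log_2 0.12 \approx 3.06$), while a 'z' that appears only 0.1% of the time carries about 10 bits ($-\log_2 0.001 \approx 9.97$).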

Here’s a simplified illustration. Let’s say our text messages only use four characters: 'A', 'B', 'C', and 'D', and their probabilities of occurrence are:

'A': 50% (0.5)

'B': 25% (0.25)

'C': 12.5% (0.125)

'D': 12.5% (0.125)

The entropy, H, is calculated as:

$$H = -\left(0.5\log_2 0.5 + 0.25\log_2 0.25 + 0.125\log_2 0.125 + 0.125\log_2 0.125\right) = 0.5 + 0.5 + 0.375 + 0.375 = 1.75$$

This gives an entropy of 1.75 bits per character. In other words, no lossless code can average fewer than 1.75 bits per character for this distribution, far fewer than the 8 bits we might have started with.
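The same calculation can be checked with a few lines of Python. This is a minimal sketch; the function name is our own, and the dictionary simply writes out the example's four-character distribution.

```python
from math import log2

def shannon_entropy(probs: dict[str, float]) -> float:
    """H = -sum(p * log2 p): average information per symbol, in bits."""
    return -sum(p * log2(p) for p in probs.values() if p > 0)

probs = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}

# Self-information of each symbol: rarer symbols carry more bits.
for symbol, p in probs.items():
    print(f"{symbol}: p = {p:<5} -> {-log2(p):.0f} bits")

H = shannon_entropy(probs)
print(f"H = {H:.2f} bits per character")   # prints H = 1.75 bits per character
```

The entropy is just the probability-weighted average of the per-symbol information: half the time 1 bit, a quarter of the time 2 bits, and a quarter of the time 3 bits.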

So how do we encode the information to get closer to the 1.75 bits?

If you use a fixed-length code, you need 2 bits per character, because 2 bits give exactly 4 distinct codewords, one for each character:

A → 00

B → 01

C → 10

D → 11

That’s simple—but it doesn’t take advantage of the fact that A appears way more often than the others.

Smarter encoding: variable-length codes

A → 0 (shortest — most frequent)

B → 10

C → 110

D → 111
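These variable-length codes can be decoded without separators because no codeword is the beginning (prefix) of another, so a decoder reading bits left to right always knows where one character ends. The minimal Python sketch below checks that property for both codes and computes each code's average length under the example's probabilities; the helper names are our own.

```python
def is_prefix_free(code: dict[str, str]) -> bool:
    """True if no codeword is a prefix of (or identical to) another symbol's codeword."""
    words = list(code.values())
    return not any(
        i != j and b.startswith(a)
        for i, a in enumerate(words)
        for j, b in enumerate(words)
    )

def average_length(code: dict[str, str], probs: dict[str, float]) -> float:
    """Expected bits per character: sum of p(symbol) * len(codeword)."""
    return sum(probs[s] * len(code[s]) for s in code)

probs = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
fixed = {"A": "00", "B": "01", "C": "10", "D": "11"}
variable = {"A": "0", "B": "10", "C": "110", "D": "111"}

print(is_prefix_free(fixed), is_prefix_free(variable))   # True True
print(average_length(fixed, probs))                      # 2.0
print(average_length(variable, probs))                   # 1.75
```

A code such as A → 0, B → 01 would fail the check: after reading a 0, the decoder cannot tell whether it has seen an A or the start of a B.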

Now let’s compare

Say your message is:

“AABACADA”

Using fixed-length codes (2 bits each):

A → 00

B → 01

C → 10

D → 11

Encoded: 00 00 01 00 10 00 11 00 → 16 bits

Using variable-length codes:

A → 0

A → 0

B → 10

A → 0

C → 110

A → 0

D → 111

A → 0

Encoded: 0 0 10 0 110 0 111 0 → 13 bits

Savings: 16 bits → 13 bits

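That’s about 19% fewer bits (3 out of 16) just by using smarter encoding. Over long messages that follow the stated probabilities, the saving settles at 12.5% (1.75 bits per character instead of 2); this particular message does a little better because A makes up 5 of its 8 characters.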
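The comparison of the two encodings can be reproduced mechanically. This minimal Python sketch (helper names are ours) encodes the example message with both codes, counts the bits, and decodes the variable-length bit string by reading bits until they match a codeword, which works only because that code is prefix-free.

```python
FIXED_CODE = {"A": "00", "B": "01", "C": "10", "D": "11"}
VARIABLE_CODE = {"A": "0", "B": "10", "C": "110", "D": "111"}

def encode(message: str, code: dict[str, str]) -> str:
    """Concatenate the codeword for each character."""
    return "".join(code[ch] for ch in message)

def decode(bits: str, code: dict[str, str]) -> str:
    """Decode a prefix-free bit string by greedily matching codewords."""
    lookup = {word: symbol for symbol, word in code.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in lookup:          # a complete codeword has been read
            out.append(lookup[current])
            current = ""
    if current:
        raise ValueError(f"trailing bits do not form a codeword: {current}")
    return "".join(out)

message = "AABACADA"
fixed_bits = encode(message, FIXED_CODE)
variable_bits = encode(message, VARIABLE_CODE)

print(fixed_bits, len(fixed_bits))          # 0000010010001100 16
print(variable_bits, len(variable_bits))    # 0010011001110 13
print(decode(variable_bits, VARIABLE_CODE)) # AABACADA
```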

Why this matters:

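This is the practical power of entropy. Shannon entropy told us we need only 1.75 bits per character on average for this distribution, not 2. The variable-length code reaches that ideal exactly: its average length is 0.5 × 1 + 0.25 × 2 + 0.125 × 3 + 0.125 × 3 = 1.75 bits. It hits the bound exactly because every probability here is a power of 1/2; for other distributions, the best code of this kind comes within one bit of the entropy.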

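This is the basic idea behind entropy coding, a stage found in many compression formats: use fewer bits where you can, based on what’s most common. ZIP’s DEFLATE algorithm, for instance, combines repeated-string matching with Huffman codes, and MP3 applies Huffman coding to audio data after its lossy compression stage.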

This leads to smaller text messages, saving bandwidth and storage. Engineers use Shannon entropy to design efficient compression algorithms and to judge how close those algorithms come to the theoretical limit, making digital communication faster and more efficient.
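The example does not say how the variable-length codes were chosen. One standard method is Huffman coding, which repeatedly merges the two least probable symbols or groups into a single node. The minimal Python sketch below (the function name is ours) applies it to the example's probabilities; with the tie-breaking used here it reproduces the codes A → 0, B → 10, C → 110, D → 111. Other tie-breaking rules can swap the 0/1 labels, but the codeword lengths, and therefore the compression, stay the same.

```python
import heapq
from itertools import count

def huffman_code(probs: dict[str, float]) -> dict[str, str]:
    """Build a prefix-free binary code with Huffman's algorithm."""
    tiebreak = count()  # keeps heap entries comparable when probabilities tie
    # Each heap entry: (probability, tiebreak id, {symbol: partial codeword})
    heap = [(p, next(tiebreak), {s: ""}) for s, p in probs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:                      # a lone symbol still needs one bit
        return {s: "0" for s in probs}
    while len(heap) > 1:
        p1, _, low = heapq.heappop(heap)    # least probable group
        p2, _, high = heapq.heappop(heap)   # second least probable group
        merged = {s: "0" + w for s, w in low.items()}
        merged.update({s: "1" + w for s, w in high.items()})
        heapq.heappush(heap, (p1 + p2, next(tiebreak), merged))
    return heap[0][2]

probs = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
code = huffman_code(probs)
for symbol in sorted(code):
    print(symbol, code[symbol])
```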

Example 2: Computational Biology – Analyzing DNA Sequences

DNA is made up of four nucleotides: Adenine (A), Guanine (G), Cytosine (C), and Thymine (T). In certain regions of DNA, like those involved in protein binding, some positions are highly conserved (meaning they almost always have the same nucleotide), while others are more variable.
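To show how entropy separates conserved from variable positions, here is a minimal Python sketch over a small, entirely hypothetical alignment of binding-site sequences. Real analyses use many more sequences and often correct for background nucleotide frequencies and small sample sizes. A column where every sequence has the same nucleotide has an entropy of 0 bits; a column where all four nucleotides appear equally often reaches the maximum of log2 4 = 2 bits.

```python
from collections import Counter
from math import log2

# Hypothetical aligned sequences; each column is one position in the site.
alignment = [
    "TATAAT",
    "TATGAT",
    "TACCAT",
    "TAGTAG",
]

def column_entropy(column: str) -> float:
    """Shannon entropy (bits) of the nucleotide frequencies at one position."""
    counts = Counter(column)
    n = len(column)
    return sum((c / n) * log2(n / c) for c in counts.values())

for position in range(len(alignment[0])):
    column = "".join(seq[position] for seq in alignment)
    print(f"position {position + 1}: {column} -> {column_entropy(column):.2f} bits")
```

Low-entropy positions (here 1, 2 and 5) are the conserved ones; the higher values at positions 3 and 4 flag variable sites.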